-

A Dataset Perspective on Offline Reinforcement Learning

-

Few-Shot Learning by Dimensionality Reduction in Gradient Space

-

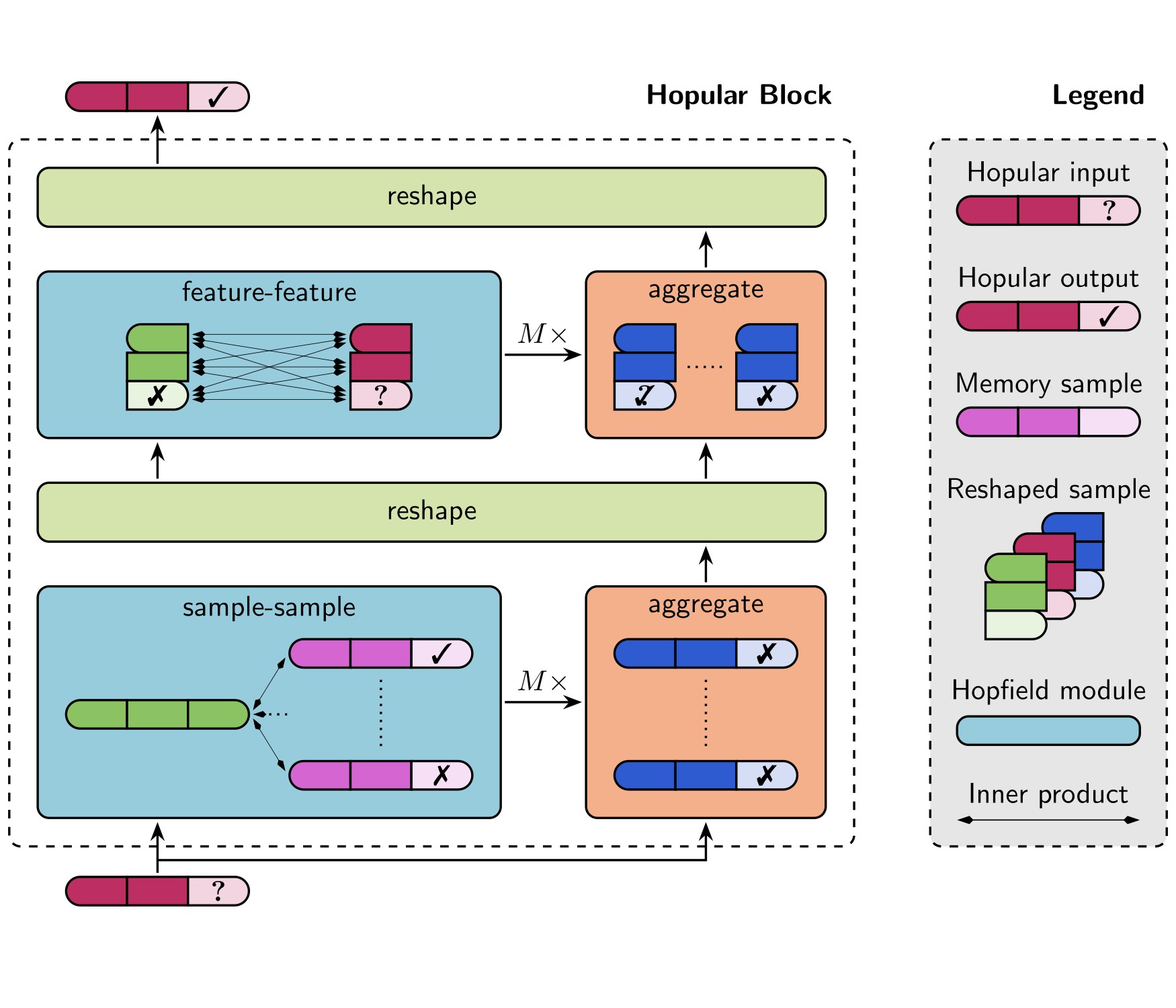

Hopular: Modern Hopfield Networks for Tabular Data

-

History Compression via Language Models in Reinforcement Learning

-

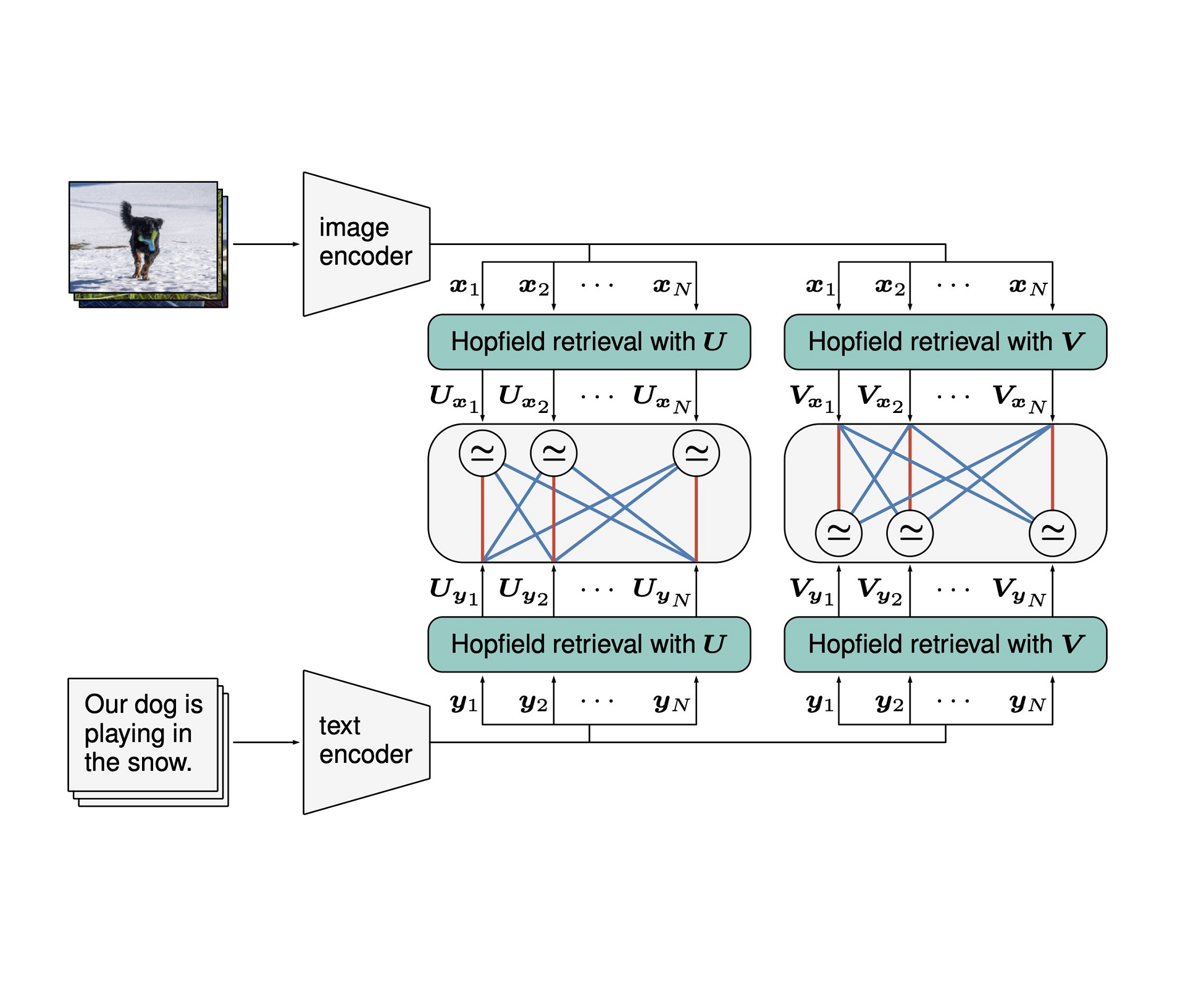

CLOOB: Modern Hopfield Networks with InfoLOOB Outperform CLIP

-

Boundary Graph Neural Networks for 3D Simulations

-

Looking at the Performer from a Hopfield point of view

-

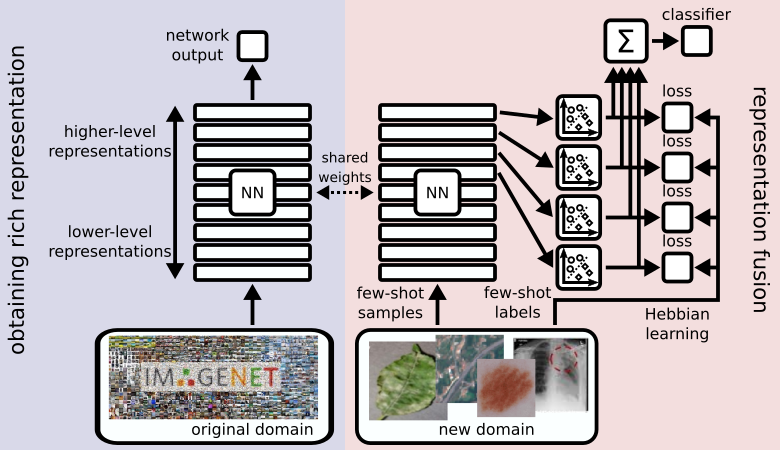

CHEF: Cross Domain Hebbian Ensemble Few-Shot Learning

-

Align-RUDDER: Learning from Few Demonstrations by Reward Redistribution

We present Align-RUDDER, an algorithm that learns from as few as two demonstrations. It aligns the demonstrations and speeds up learning by redistributing rewards, which reduces their delay.

-

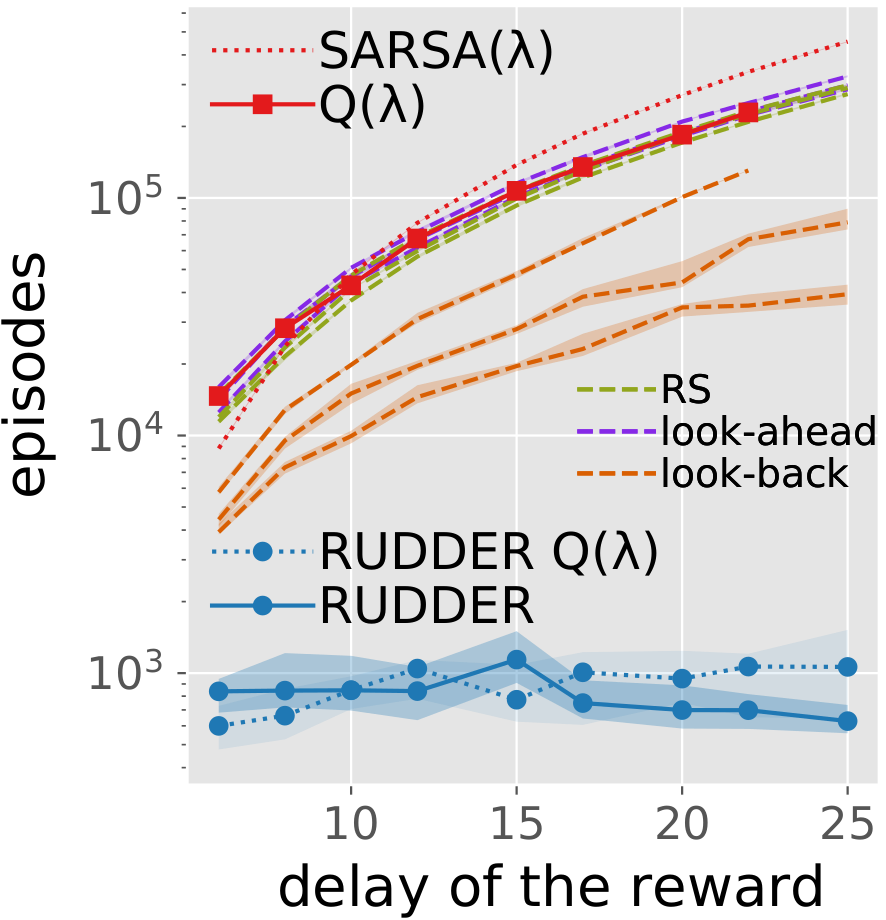

RUDDER - Reinforcement Learning with Delayed Rewards

-

Hopfield Networks is All You Need