The goal of autonomous driving is to develop the capacity to sense and move through general traffic environments with little to no auxiliary input. Research on autonomous driving is gaining traction with the increasing availability of realistic simulation engines and large-scale datasets. Owing to recent advances, machine learning (ML), and deep reinforcement learning in particular, is becoming increasingly important for path planning, for improving planned paths, and for driving decisions.

Current self-driving systems already work well in simple scenarios (e.g. highways). However, driving in complex environments is still difficult. New challenges arise when the system has to handle elaborate traffic conditions, pedestrian behavior, and unusual driving situations, potentially all at once. Future self-driving vehicles need to operate in diverse environments and under a wide variety of conditions (e.g. varying weather, unpredictable behavior of other road users, movable traffic lights). Advancing the state of the art in autonomous driving is at the heart of the research that our team at JKU pursues.

Recent publications in AI 4 Driving:

Visual Scene Understanding for Autonomous Driving Using Semantic Segmentation

Hofmarcher, M., Unterthiner, T., Arjona-Medina, J., Klambauer, G., Hochreiter, S., and Nessler, B.

2019

Deep neural networks are an increasingly important technique for autonomous driving, especially as a visual perception component. Deployment in a real environment requires that the algorithms controlling the vehicle be explainable and inspectable. Such explanations matter not only for legal and insurance issues but also for engineers and developers who need provable guarantees of functional quality. This applies to every scenario in which the outputs of deep networks control potentially life-threatening machines. For an autonomous driving system, we suggest a tiered approach, whose main component is a semantic segmentation model, rather than an end-to-end approach. For a system to provide meaningful explanations of its decisions, it must be able to explain the semantics it attributes to the complex sensory inputs it perceives. For high-dimensional visual input, this attribution takes the form of pixel-wise classification, which assigns an object class to every pixel in the image. This process is called semantic segmentation. We propose an architecture that achieves segmentation performance viable in real time and that fits within the limited computational power available in production vehicles. The output of such a semantic segmentation model can be used as input to an interpretable autonomous driving system.
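The pixel-wise classification step described above can be sketched as an argmax over per-class scores at each pixel. This is a minimal illustration, not the proposed architecture: it assumes some network has already produced a score for every class at every pixel, and the class names, the `segment` helper, and the scores below are hypothetical.

```python
from typing import List

# Hypothetical label set for illustration; not the paper's class list.
CLASSES = ["road", "car", "pedestrian"]


def segment(scores: List[List[List[float]]]) -> List[List[int]]:
    """Return a label map where each pixel gets its highest-scoring class index.

    `scores[c][y][x]` is an assumed per-class score (e.g. a network logit)
    for class `c` at pixel (y, x).
    """
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    labels = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Pixel-wise classification: argmax over the class dimension.
            labels[y][x] = max(range(num_classes), key=lambda c: scores[c][y][x])
    return labels


if __name__ == "__main__":
    # Made-up scores for a 2 x 2 image, one 2 x 2 map per class.
    example = [
        [[0.9, 0.1], [0.2, 0.3]],   # road
        [[0.05, 0.8], [0.1, 0.3]],  # car
        [[0.05, 0.1], [0.7, 0.4]],  # pedestrian
    ]
    labels = segment(example)
    print([[CLASSES[c] for c in row] for row in labels])
    # [['road', 'car'], ['pedestrian', 'pedestrian']]
```

In practice this would be a single vectorized argmax over the class axis of the network's output tensor; the explicit loops only make the per-pixel decision visible.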

Patch Refinement - Localized 3D Object Detection

Lehner, J., Mitterecker, A., Adler, T., Hofmarcher, M., Nessler, B., and Hochreiter, S.

2019

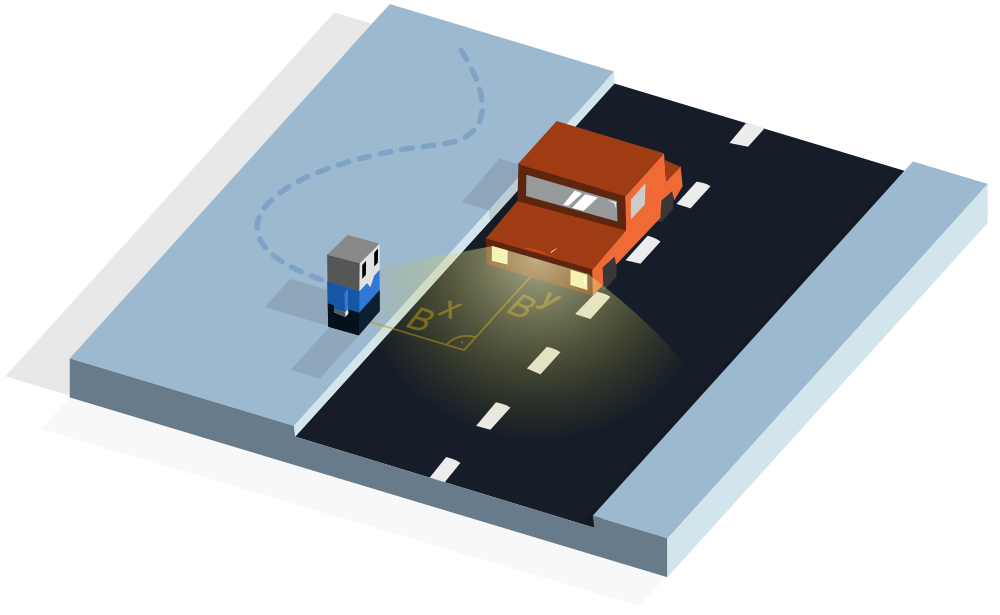

We introduce Patch Refinement, a two-stage model for accurate 3D object detection and localization from point cloud data. Patch Refinement is composed of two independently trained VoxelNet-based networks, a Region Proposal Network (RPN) and a Local Refinement Network (LRN). We decompose the detection task into a preliminary Bird’s Eye View (BEV) detection step and a local 3D detection step. Based on the BEV locations proposed by the RPN, we extract small point cloud subsets ("patches"), which are then processed by the LRN. Because each patch covers only a small area, the LRN is less limited by memory constraints, so we can apply encoding with a higher voxel resolution locally. The independence of the LRN enables the use of additional augmentation techniques and allows for efficient, regression-focused training, as it uses only a small fraction of each scene. Evaluated on the KITTI 3D object detection benchmark, our submission from January 28, 2019, outperformed all previous entries on all three difficulty levels of the class car, using only 50% of the available training data and only LiDAR information.
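The patch idea can be illustrated with a minimal sketch of the data flow only (no networks): points around each BEV proposal are cropped and voxelized on a finer, patch-local grid. The helper names (`extract_patch`, `voxelize`, `local_voxel_grids`, `bev_cells`), the square patch shape, and all sizes are assumptions for illustration and are not taken from the paper.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres
VoxelIndex = Tuple[int, int, int]


def extract_patch(points: List[Point], center: Tuple[float, float],
                  half_size: float) -> List[Point]:
    """Keep points whose BEV (x, y) lies in the half-open square around `center`."""
    cx, cy = center
    return [p for p in points
            if cx - half_size <= p[0] < cx + half_size
            and cy - half_size <= p[1] < cy + half_size]


def voxelize(points: List[Point], origin: Point,
             voxel_size: float) -> Dict[VoxelIndex, List[Point]]:
    """Group points into cubic voxels of edge `voxel_size`, indexed from `origin`."""
    voxels: Dict[VoxelIndex, List[Point]] = {}
    for p in points:
        idx = (int((p[0] - origin[0]) // voxel_size),
               int((p[1] - origin[1]) // voxel_size),
               int((p[2] - origin[2]) // voxel_size))
        voxels.setdefault(idx, []).append(p)
    return voxels


def local_voxel_grids(points: List[Point], proposals: List[Tuple[float, float]],
                      half_size: float, voxel_size: float,
                      z_min: float) -> List[Dict[VoxelIndex, List[Point]]]:
    """For each hypothetical BEV proposal, crop a patch and voxelize it locally."""
    grids = []
    for cx, cy in proposals:
        patch = extract_patch(points, (cx, cy), half_size)
        origin = (cx - half_size, cy - half_size, z_min)  # patch-local grid origin
        grids.append(voxelize(patch, origin, voxel_size))
    return grids


def bev_cells(extent_x: float, extent_y: float, voxel_size: float) -> int:
    """Number of BEV grid cells needed to cover an extent_x by extent_y area."""
    return math.ceil(extent_x / voxel_size) * math.ceil(extent_y / voxel_size)


if __name__ == "__main__":
    cloud = [(10.0, 5.0, -1.0), (10.5, 5.5, -0.5), (30.0, 0.0, 0.0)]
    grids = local_voxel_grids(cloud, [(10.0, 5.0)], half_size=2.0,
                              voxel_size=0.125, z_min=-3.0)
    print(sorted(grids[0]))             # [(16, 16, 16), (20, 20, 20)]
    # Hypothetical sizes: 64 m x 64 m scene at 0.25 m vs. 4 m x 4 m patch at 0.125 m.
    print(bev_cells(64.0, 64.0, 0.25))  # 65536
    print(bev_cells(4.0, 4.0, 0.125))   # 1024
```

With these illustrative numbers, the local grid is twice as fine as the scene-level grid yet has 64 times fewer BEV cells, which is the kind of trade-off that lets a refinement stage afford a higher voxel resolution within a fixed memory budget.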